ChatGPT is getting tons of press, and it is well deserved. It's a major step forward. About half of our clients are using it in some capacity. The skill of extracting the best results from these tools is incredibly valuable, and it may soon become a job title of its own: "Artificial Intelligence Interpreter."

Quality Teacher

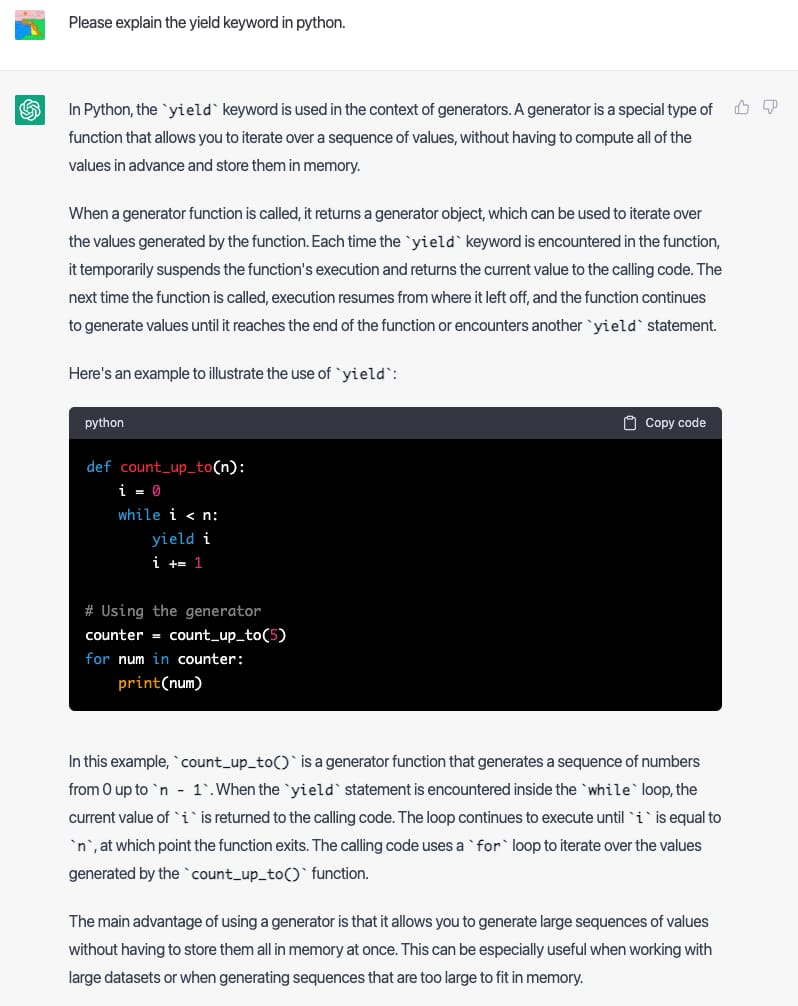

First and foremost, ChatGPT is an exceptional teacher. It synthesizes concepts and presents them in a novel way, and it does so clearly and lucidly. I've been writing Python for over a decade. I've studied its quirks and special concepts. I've probably thought about the yield keyword for hundreds of hours, and I've read dozens of explanations. Here is the excerpt from my conversation with ChatGPT:

The program gives the most lucid explanation I've ever read. It is a better explainer than any human author, teacher, or engineer I have ever encountered. The explanation is 100% factual, complete, clear, logical, and concise. I could not formulate a better answer to the question. I suspect it is especially well tuned for computer science topics, but I've seen this level of expertise in other areas as well. It is impressive.
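For readers who haven't run into yield before, here is a minimal sketch of what the keyword does. This is my own illustration, not ChatGPT's answer, and the function name countdown is made up for the example. A function containing yield returns a generator: each call to next() runs the body up to the next yield, hands back a value, and pauses there until asked again.

```python
def countdown(start):
    """Generator: yields start, start - 1, ..., 1, pausing after each value."""
    current = start
    while current > 0:
        # Execution stops here and resumes on the next request for a value.
        yield current
        current -= 1


gen = countdown(3)   # nothing in the body has run yet
first = next(gen)    # runs up to the first yield
rest = list(gen)     # resumes where it paused and drains the remaining values

print(first)  # 3
print(rest)   # [2, 1]
```

The point of the design is laziness: values are produced one at a time, on demand, and the local state (current) survives between requests.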

Hallucinations and Confidence

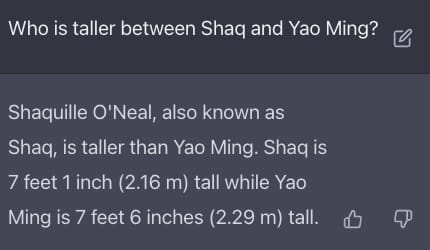

ChatGPT is prone to hallucinations, though. In this context, a hallucination is a statement presented as fact that does not reflect reality. Many of the discovered and publicized hallucinations have since been fixed. Here is a popular one:

In this case, it has the facts correct, but its interpretation and exposition of the word "taller" is incorrect. The piece I want to focus on here is the authority with which the information is presented. It states that Shaq is taller with absolute certainty. This is dangerous when the user is not informed enough or equipped to validate the information ChatGPT provides. Many scientific and data applications use confidence levels and metrics to orient the user. If ChatGPT expressed its confidence through hedging language or equivocation, that could signal to the user that it may not know the answer for sure.
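To make the hedging idea concrete, here is a rough sketch of how a confidence score could be turned into hedged wording. Everything in it is an assumption for illustration: the function name hedge, the thresholds, and the phrasing are mine, and I'm assuming a confidence value between 0 and 1 is available at all, which is not something the ChatGPT interface shows the user.

```python
def hedge(statement, confidence):
    """Prefix a statement with hedging language based on a confidence score.

    The thresholds (0.9 and 0.6) are arbitrary illustrative choices.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= 0.9:
        return statement                      # stated plainly
    if confidence >= 0.6:
        return f"I believe {statement}"       # mild hedge
    return f"I am not sure, but possibly {statement}"  # strong hedge


print(hedge("Shaq is taller.", 0.95))  # Shaq is taller.
print(hedge("Shaq is taller.", 0.70))  # I believe Shaq is taller.
print(hedge("Shaq is taller.", 0.30))  # I am not sure, but possibly Shaq is taller.
```

Even a crude mapping like this would give an uninformed user a cue that the answer deserves a second look.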

Turing Test

Alan Turing proposed his test in "Computing Machinery and Intelligence" in 1950, and it has long been held up as an important standard for judging machine learning and artificial intelligence models. Simply put, a human (labeled the interrogator) holds a text conversation with an agent and must decide whether that agent is a human or an artificial intelligence. If an artificial intelligence convinces the interrogator that they are speaking with another human, the computer has "passed" the test. I think that with some small tweaks to stop it from revealing itself when asked, ChatGPT would likely pass with flying colors.

In my opinion, this is irrelevant. It doesn't matter whether we know we're talking to a human or a computer. We live in a world where much of our consciousness and attention is already directed by automation and computed systems. I personally don't care about the distinction or even spend time thinking about it. What matters is the quality of the information and the quality of where our attention gets directed. Given the example above with Python yield, it's clear that ChatGPT is superhuman at this. Many people do not properly appreciate the state of the art in this field, and I think society will see its implications not at all and then all at once.

Final Thoughts

If you have an application that might benefit from ChatGPT and need an expert user of the system to guide its build and mold the prompts, reach out to us. We're about to see some major restructuring of society based on these powerful tools. I hope you find yourself on the right side of the keyboard.